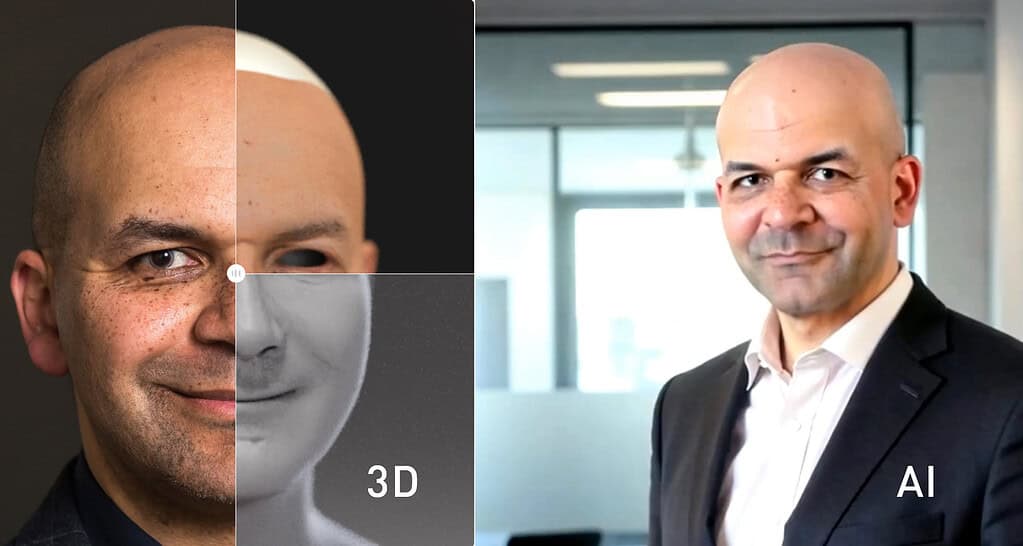

We encounter digital avatars more and more frequently, whether as virtual moderators in e-learning courses, as talking assistants on websites or as historical figures in museums. But the term "avatar" covers very different technologies. Two concepts in particular are often confused: the 3D-based avatar and the AI-generated avatar.

A 3D avatar is based on a fully modeled 3D character created with game engines such as Unreal Engine or Unity. Epic Games' photorealistic MetaHuman system is particularly popular because it makes it easy to create believable faces.

To integrate AI-driven conversation skills, specialized SDKs are used within these engines.

Advantage: When well animated, 3D avatars can move realistically in space, gesture and react to their surroundings, for example in a virtual exhibition hall or a training session.

Point of criticism: Animating a 3D avatar is complex. Many platforms offer only a limited set of standard animations. If you want a truly lively avatar, you have to invest in elaborate motion capture (mocap) or keyframe animation and need the corresponding know-how.

AI avatars are usually based on an uploaded photo or video, from which an AI generates a "talking face". This technology is particularly popular for web videos, learning platforms and social media.

These tools usually run in the browser and make it possible to create, in just a few minutes, an avatar that speaks in sync with the text. The content usually comes from an LLM, and the voice is generated by a text-to-speech (TTS) system.

Advantage: Quick to produce and visually impressive, ideal for short explainer videos, social media clips or simple chatbot applications.

Point of criticism: AI avatars are functionally limited. They often appear wooden and cannot gesture or move freely in space, which makes them of little use in XR environments.

AI avatars are also used as a visual interface for LLM-based chatbots, for example on websites or in interactive information terminals. The avatar's mouth moves in sync with the spoken text while an LLM generates the answers in real time.
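How the commercial tools synchronize the mouth internally is not described here, and photo-based talking-face generators typically use their own neural models. One classic and much simpler technique, common for rigged 3D faces, is to map timed phonemes to mouth shapes (visemes). Some TTS engines can return such timing data alongside the audio. The sketch below assumes that data is already available; the phoneme symbols, the tiny viseme table and the names `Phoneme`, `VisemeKey` and `to_viseme_track` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical, heavily simplified phoneme-to-viseme table (an assumption for
# illustration; real systems use larger, standardized viseme sets).
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open", "e": "wide", "i": "wide",
    "o": "round", "u": "round",
}


@dataclass
class Phoneme:
    symbol: str
    start: float  # seconds from the start of the TTS audio
    end: float


@dataclass
class VisemeKey:
    viseme: str
    start: float
    end: float


def to_viseme_track(phonemes: list[Phoneme]) -> list[VisemeKey]:
    """Map timed phonemes to mouth shapes, merging consecutive identical shapes."""
    track: list[VisemeKey] = []
    for ph in phonemes:
        # Unknown phonemes fall back to a neutral "rest" mouth shape.
        viseme = PHONEME_TO_VISEME.get(ph.symbol, "rest")
        if track and track[-1].viseme == viseme and track[-1].end == ph.start:
            track[-1].end = ph.end  # extend the previous key instead of adding one
        else:
            track.append(VisemeKey(viseme, ph.start, ph.end))
    return track


# Made-up sample timings for the word "mama".
sample = [
    Phoneme("m", 0.00, 0.08), Phoneme("a", 0.08, 0.20),
    Phoneme("m", 0.20, 0.28), Phoneme("a", 0.28, 0.45),
]
for key in to_viseme_track(sample):
    print(f"{key.start:.2f}-{key.end:.2f}s: {key.viseme}")
```

The resulting track tells the renderer which mouth shape to show at which moment. Because it only looks at sounds, it says nothing about matching facial expressions or gestures, which is exactly the limitation discussed further below.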

Another area of application is historical figures displayed as "talking busts" in museums or AR applications. A digitally animated Einstein or Goethe narrates as a voice-over based on historical facts, but the interaction remains one-sided.

Critical point: In immersive XR applications, such as a digital classroom, an AI avatar is not enough. It cannot point at a whiteboard, gesture or react to physical user interaction, which makes it unsuitable for interactive scenarios.

There is no general answer to which avatar is "better": it depends heavily on the respective use case.

A 3D avatar with real body language, gestures, animation and interactivity requires technical know-how, suitable tools and significantly more development effort.

AI avatars, on the other hand, are usually ready for use in just a few clicks, so-called "click-and-go" solutions. They offer a simple way to give content a visual face, but without spatial depth or real interaction.

| Criterion | 3D avatar | AI avatar |
|---|---|---|
| Visual quality | Rendered in real time, possibly less realistic | Very photorealistic |
| Movement | Full body language and gestures | Limited, mostly only mouth movement |
| Spatial integration | Can be placed in 3D/XR worlds | No real spatial reference |
| Interactivity | Pointing, grabbing and moving possible | Linear responses, no spatial interaction |
| Production costs | High, technical know-how required | Low, simple web interface |
| Field of application | XR, virtual showrooms, training | Web videos, chatbots, social media |

AI-generated avatars are usually created from individual photos or video sequences, so the facial expressions the avatar can later show depend heavily on the source material. If someone is always smiling in the reference images, the AI avatar will also smile constantly, even when the content is serious. Conversely, a serious-looking avatar will not convey any warmth or lightness, even when making friendly statements.

As a result, AI avatars often appear mask-like: facial expressions remain rigid or inappropriate, even while the lips move. Body movements often appear uncoordinated because they have no natural connection to speech. The avatar does move, but not like a person who consciously communicates through gestures.

In short: an AI avatar cannot judge which gesture or expression makes sense at which moment; it simply does "something".

Of course, this does not mean that 3D avatars solve these problems automatically. Here, too, the face must first be modeled, including finely defined blendshapes or bone structures. These facial expressions must then be animated or tracked live, e.g. via face capture or keyframing. The same applies to body animation: a 3D model without suitable motion data also remains lifeless.
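Blendshapes (also called morph targets) are commonly combined linearly: each target shape stores displaced vertex positions, and an animator or a face-capture system sets a weight per shape every frame. The resulting vertex is the base position plus the weighted sum of each target's offset, v = base + Σ wᵢ · (targetᵢ − base). The sketch below shows that arithmetic with plain Python tuples and a three-vertex "mesh"; the shape names `smile` and `jaw_open` and the function `apply_blendshapes` are hypothetical, and production engines evaluate this for meshes with thousands of vertices.

```python
# A vertex position as an (x, y, z) tuple.
Vec3 = tuple[float, float, float]


def apply_blendshapes(
    base: list[Vec3],
    targets: dict[str, list[Vec3]],
    weights: dict[str, float],
) -> list[Vec3]:
    """Linear blendshapes: v = base + sum_i w_i * (target_i - base)."""
    result = [list(v) for v in base]
    for name, w in weights.items():
        target = targets[name]
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                # Add this shape's weighted offset from the neutral face.
                result[i][axis] += w * (t[axis] - b[axis])
    return [(v[0], v[1], v[2]) for v in result]


# Hypothetical three-vertex "face": two mouth corners and one forehead point.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0)]
shapes = {
    "smile":    [(0.0, 0.2, 0.0), (1.0, 0.2, 0.0), (0.5, 1.0, 0.0)],
    "jaw_open": [(0.0, -0.5, 0.0), (1.0, -0.5, 0.0), (0.5, 1.0, 0.0)],
}

half_smile = apply_blendshapes(neutral, shapes, {"smile": 0.5})
print(half_smile)
```

The weights are what the animation actually has to supply over time, whether from keyframes or live face capture, which is why a well-modeled face still stays lifeless without good animation data.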

The difference, however, is that with a 3D avatar this depth of expression is technically achievable, provided you are prepared to put in the effort.

An AI avatar can be set up in half an hour: with just a few pictures and a few clicks, a "talking me" is created.

A 3D avatar with real facial expressions and natural gestures, on the other hand, often takes several days of work and solid 3D knowledge. Without the appropriate know-how, this is hardly realistic to implement.

In many cases, a voice interface with voice control is completely sufficient, for example Alexa, Siri or digital hotlines. The avatar is then just a visual add-on. The real innovation often lies in the voice interface, not in the face.

However, if a presentation, sales pitch or training course is to be simulated, an avatar with body language and spatial presence offers real added value, and this is where a well-made 3D avatar can score points.

AI avatars are ideal for fast, photorealistic faces in videos and simple chatbots. They often look impressive, but remain functionally limited - especially when interaction, movement and spatial reference are required.

3D avatars offer greater potential: they can be placed in XR environments, animated freely and made to interact with their surroundings. Creating them is more complex, but used correctly, they enable immersive and convincing experiences.

The central question remains: Is the avatar just a gimmick - or a real player? Only the latter justifies the effort.

A 3D avatar is a fully modeled character in 3D space that is rendered in a 3D editor or game engine, while AI avatars are usually AI-generated animated faces based on photos.

AI avatars are particularly suitable for short web videos, e-learning or social media.

The creation of a 3D avatar is technically demanding and requires tools such as Unreal Engine, MetaHuman or Character Creator, and motion capture is usually used for animation.

AI avatars are mostly 2D-based and therefore not suitable for interactive 3D scenarios.

Synthesia, D-ID and HeyGen are leading providers for the rapid creation of browser-based AI avatars. The videos created with these tools can then be downloaded.

Are you interested in developing a virtual reality or 360° application? You may still have questions about budget and implementation. Feel free to contact me.

I look forward to hearing from you.

Clarence Dadson CEO Design4real